Volcano

Volcano

An Extensible and Parallel Query Evaluation System

Volcano-一个可扩展的并行查询评估系统

Abstract-To investigate the interactions parallelism in database query processing, we have developed a new dataflow query execution system called Volcano. The Volcano effort provides a rich environment for research and education in database systems design, heuristics for query optimization, parallel query execution, and resource allocation.

摘要:为了研究数据库查询处理中可扩展性和并行性的相互作用,我们开发了一种新的数据流查询执行系统,称为 Volcano。 Volcano 的工作为数据库系统设计、查询优化启发式、并行查询执行和资源分配方面的研究和教育提供了丰富的环境。

Volcano uses a standard interface between algebra operators, allowing easy addition of new operators and operator implementations. Operations on individual items, e.g., predicates, are imported into the query processing operators using support functions. The semantics of support functions is not prescribed; any data type including complex objects and any operation can be realized. Thus, Volcano is extensible with new operators, algorithms, data types, and type-specific methods.

Volcano 在代数运算符之间使用标准接口,允许轻松添加新运算符和运算符实现。 使用支持函数将对单个项目(例如谓词)的操作导入到查询处理运算符中。 支持函数的语义没有规定; 可以实现包括复杂对象在内的任何数据类型和任何操作。 因此,Volcano 可以通过新的运算符、算法、数据类型和特定于类型的方法进行扩展。

Volcano includes two novel meta-operators. The choose-plan meta-operator supports dynamic query evaluation plans that al- low delaying selected optimization decisions until run-time, e.g., for embedded queries with free variables. The exchange meta-operator supports intra-operator parallelism on parti- tioned datasets and both vertical and horizontal inter-operator parallelism, translating between demand-driven dataflow within processes and data-driven dataflow between processes.

Volcano 包括两个新颖的元运算符。 选择计划元运算符支持动态查询评估计划,允许将选定的优化决策延迟到运行时,例如,对于具有自由变量的嵌入式查询。 交换元操作符支持分区数据集上的操作符内并行性以及垂直和水平操作符间并行性,在进程内的需求驱动数据流和进程之间的数据驱动数据流之间进行转换。

All operators, with the exception of the exchange operator, have been designed and implemented in a single-process envi- ronment, and parallelized using the exchange operator. Even operators not yet designed can be parallelized using this new operator if they use and provide the interator interface. Thus, the issues of data manipulation and parallelism have become orthogonal, making Volcano the first implemented query exe- cution engine that effectively combines extensibility and parallelism.

除交换运算符外,所有运算符都是在单进程环境中设计和实现的,并使用交换运算符进行并行化。 即使尚未设计的运算符如果使用并提供交互器接口,也可以使用这个新运算符进行并行化。 因此,数据操作和并行性问题变得正交,使 Volcano 成为第一个实现的、有效结合可扩展性和并行性的查询执行引擎。

index Terms-Dynamic query evaluation plans, extensible database systems, iterators, operator model of parallelization, query execution.

索引术语-动态查询评估计划、可扩展数据库系统、迭代器、并行化操作模型、查询执行。

I. INTRODUCTION

IN ORDER to investigate the interactions of extensibil- ity, efficiency, and parallelism in database query pro- cessing and to provide a testbed for databse systems research and education, we have designed and implemented a new query evaluation system called Volcano. It is intended to provide an experimental vehicle for research into query execution techniques and query optimization optimization heuristics rather than a database system ready to support applications. it is not a complete database systern as it lacks features such as a user-friendly query language, a type system for instances (record definitions), a query optimizer, and catalogs. Because of this focus, Volcano is able to serve as an experimental vehicle for a multitude of purposes, all .of them openended, which results in a combination of requirements that have not been integrated in a single system before.

为了研究数据库查询处理中可扩展性、效率和并行性的相互作用,并为数据库系统研究和教育提供测试平台,我们设计并实现了一个名为 Volcano 的新查询评估系统。 它旨在提供一个用于研究查询执行技术和查询优化启发式的实验工具,而不是一个准备支持应用程序的数据库系统。它不是一个完整的数据库系统,因为它缺乏用户友好的查询语言、实例类型系统(记录定义)、查询优化器和目录等功能。 由于这一重点,Volcano 能够作为多种目的的实验工具,所有这些目的都是开放式的,从而产生了以前从未集成到单个系统中的需求组合。

First, it is modular and extensible to enable future research, e.g., on algorithms, data models, resource allocation, parallel execution, load balancing, and query optimization heuristics. Thus, Vol- cano provides an infrastructure for experimental research rather than a final research prototype in itself.

首先,它是模块化和可扩展的,可以支持未来的研究,例如算法、数据模型、资源分配、并行执行、负载平衡和查询优化启发法。 因此,Volcano 为实验研究提供了基础设施,而不是其本身的最终研究原型。

Second, it is simple in its design to allow student use and research. Modularity and simplicity are very important for this pur- pose because they allow students to begin working on projects without an understanding of the entire design and all its details, and they permit several concurrent student projects.

其次,它的设计简单,方便学生使用和研究。 模块化和简单性对于此目的非常重要,因为它们允许学生在不了解整个设计及其所有细节的情况下开始项目工作,并且允许多个并发的学生项目。

Third, Volcano’s design does not presume any particular data model; the only assumption is that query processing is based on transforming sets of items using parameterized operators. To achieve data model indepen- dence, the design very consistently separates set process- ing control (which is provided and inherent in the Vol- cano operators) from interpretation and manipulation of data items (which is imported into the operators, as de- scribed later).

第三,Volcano的设计没有假设任何特定的数据模型; 唯一的假设是查询处理基于使用参数化运算符转换项目集。 为了实现数据模型的独立性,设计非常一致地将集合处理控制(由 Volcano 运算符提供和固有的)与数据项的解释和操作(如所述导入到运算符中)分开。 之后)。

Fourth, to free algorithm design, imple- mentation, debugging, tuning, and initial experimentation from the intricacies of parallelism but to allow experi- mentation with parallel query processing. Volcano can be used as a single-process or as a parallel system. Single- process query evaluation plans can already be parallelized easily on shared-memory machines and soon also on dis- tributed-memory machines.

第四,将算法设计、实现、调试、调整和初始实验从复杂的并行性中解放出来,但允许进行并行查询处理的实验。 Volcano 可以用作单进程或并行系统。 单进程查询评估计划已经可以在共享内存机器上轻松并行化,并且很快也可以在分布式内存机器上并行化。

Fifth, Volcano is realistic in its query execution paradigm to ensure that students learn how query processing is really done in commercial data- base products. For example, using temporary files to transfer data from one operation to the next as suggested in most textbooks has a substantial performance penalty, and is therefore used in neither real database systems nor in Volcano.

第五,Volcano 的查询执行范例是现实的,以确保学生了解商业数据库产品中的查询处理是如何真正完成的。 例如,按照大多数教科书的建议,使用临时文件将数据从一个操作传输到下一个操作会带来很大的性能损失,因此既不在真正的数据库系统中使用,也不在 Volcano 中使用。

Finally, Volcano’s means for parallel query processing could not be based on existing models since all models explored to date have been designed with a particular data model and operator set in mind. Instead, our design goal was to make parallelism and data manip- ulation orthogonal, which means that the mechanisms for parallel query processing are independent of the operator set and semantics, and that all operators, including new ones, could be designed and implemented independently of future parallel execution.

最后,Volcano 的并行查询处理方法不能基于现有模型,因为迄今为止探索的所有模型都是在设计时考虑了特定的数据模型和运算符集。 相反,我们的设计目标是使并行性和数据操作正交,这意味着并行查询处理的机制独立于操作符集和语义,并且所有操作符,包括新操作符,都可以独立于操作符集和语义来设计和实现。 未来的并行执行。

Following a design principle well established in operating systems research but not exploited in most database system designs, Volcano provides mechanisms to support policies. Policies can be set by a human experimenter or by a query optimizer. The separation of mechanisms and policies has contributed to the extensibility and modular- ity of modern operating systems, and may make the same contribution to extensible database systems. We will re- turn to this separation repeatedly in this paper.

Volcano 遵循操作系统研究中确立但大多数数据库系统设计中未采用的设计原则,提供了支持策略的机制。 策略可以由人类实验者或查询优化器设置。 机制和策略的分离有助于现代操作系统的可扩展性和模块化,并且可能对可扩展数据库系统做出同样的贡献。 我们将在本文中反复讨论这种分离。

Since its very purpose is to allow future extensions and research, Volcano is continuously being modified and ex- tended. Among the most important recent extensions were the design and implementation of two meta-operators. Both of them are not only new operators but also embody and encapsulate new concepts for query processing. They are meta-operators since they do not contribute to data manipulation, selection, derivation, etc., but instead pro- vide additional control over query processing that cannot be provided by conventional operators like file scan, sort, and merge join. The choose-plan operator implements dynamic query evaluation plans, a concept developed for queries that must be optimized with incomplete informa- tion [ 171. For example, it is not possible to reliably op- timize an embedded query if one of the constants in the query predicate is actually a program variable and there- fore unknown during compilation and optimization. Dy- namic plans allow preparation for multiple equivalent plans, each one optimal for a certain range of actual pa- rameter values. The choose-plan operator selects among these plans at runtime while all other operators in Vol- cano’s operator set (present or future) are entirely oblivious to the presence and function of the choose-plan operator.

由于其目的是为了未来的扩展和研究,Volcano 正在不断地被修改和扩展。 最近最重要的扩展是两个元运算符的设计和实现。 它们不仅是新的运算符,而且体现和封装了查询处理的新概念。 它们是元运算符,因为它们不参与数据操作、选择、派生等,而是提供对查询处理的额外控制,这是文件扫描、排序和合并连接等传统运算符无法提供的。 选择计划运算符实现动态查询评估计划,这是一个为必须使用不完整信息进行优化的查询而开发的概念[171。例如,如果常量之一,则不可能可靠地优化嵌入式查询 查询谓词中的实际上是一个程序变量,因此在编译和优化期间是未知的。 动态计划允许准备多个等效计划,每个计划对于特定范围的实际参数值都是最佳的。 选择计划运算符在运行时在这些计划中进行选择,而 Volcano 运算符集中的所有其他运算符(当前或未来)完全不知道选择计划运算符的存在和功能。

The second meta-operator, the exchange operator, im- plements and controls parallel query evaluation in Vol- cano. While operators can exchange data without the ex- change operator, in fact within processes as easily as a single procedure call, this new operator exchanges data across process and processor boundaries. All other oper- ators are implemented and execute without regard to par- allelism; all parallelism issues like partitioning and flow control are encapsulated in and provided by the exchange operator. Thus, data manipulation and parallelism are in- deed orthogonal in Volcano [20]. Beyond the cleanliness from a software engineering point of view, it is also very encouraging to see that this method of parallelizing a query processing engine does indeed allow linear or near- linear speedup .

第二个元运算符,即交换运算符,在 Volcano 中实现和控制并行查询评估。 虽然操作符可以在没有交换操作符的情况下交换数据,实际上在进程内就像单个过程调用一样容易,但这个新操作符可以跨进程和处理器边界交换数据。 所有其他运算符的实现和执行均不考虑并行性; 所有并行问题(例如分区和流量控制)都封装在交换运算符中并由交换运算符提供。 因此,数据操作和并行性在 Volcano 中确实是正交的[20]。 除了从软件工程角度来看的简洁性之外,看到这种并行化查询处理引擎的方法确实允许线性或近线性加速也非常令人鼓舞。

This paper is a general overview describing the overaii goals and design principles. Other articles on Volcano were written on special aspects of the system, e.g., [16]- [21], [25], [26]. These articles also include experimental performance evaluations of Volcano’s techniques and algorithms, in particular [ 181, [2 11.

本文是描述总体目标和设计原则的总体概述。 关于 Volcano 的其他文章是关于系统的特殊方面的,例如 [16]-[21]、[25]、[26]。 这些文章还包括 Volcano 技术和算法的实验性能评估,特别是 [181, [2 11.

The present paper is organized as follows. In the following section, we briefly review previous work that inluenced Volcano’s design. A detailed description of Vol- cano follows in Section III. Section IV contains a discussion of extensibility in the system. Dynamic query evaluation plans and their implementation are described in Section V. Parallel processing encapsulated in the ex- change module is described in Section VI. Section VII contains a summary and our conclusions from this effort.

本文的结构如下。 在下一节中,我们简要回顾一下影响 Volcano 设计的先前工作。 第三节对 Volcano 进行了详细描述。 第四节讨论了系统的可扩展性。 动态查询评估计划及其实现在第五节中描述。封装在交换模块中的并行处理在第六节中描述。 第七节包含总结和我们从这项工作中得出的结论。

II. RELATED WORK

Since so many different systems have been developed to process large datesets efficiently, we only survey the systems that have significantly influenced the design of Volcano. Our work has been influenced most strongly by WiSS, GAMMA, and EXODUS. The Wisconsin Storage System (WiSS) [lo] is a record-oriented file system pro- viding heap files, B-tree and hash indexes, buffering, and scans with predicates. GAMMA [1l] is a software data- base machine running on a number of general-purpose CPU’s as a backend to a UNIX host machine. It was de- veloped on 17 VAX 11/750’s connected with each other and the VAX 11/750 host via a 80 Mb /s token ring. Eight GAMMA processors had a local disk device, accessed us- ing WiSS. The disks were accessible only locally, and update and selection operators used only these eight pro- cessors. The other, diskless processors were used for join processing. Recently, the GAMMA software has been ported to an Intel iPSC/2 hypercube with 32 nodes, each with a local disk drive. GAMMA uses hash-based algo- rithms extensively, implemented in such a way that each operator is executed on several (usually all) processors and the input stream for each operator is partitioned into disjoint sets according to a hash function.

由于已经开发了许多不同的系统来有效地处理大型数据集,因此我们只调查对 Volcano 设计有重大影响的系统。 我们的工作受 WiSS、GAMMA 和 EXODUS 的影响最为强烈。 威斯康星存储系统 (WiSS) [lo] 是一个面向记录的文件系统,提供堆文件、B 树和哈希索引、缓冲和谓词扫描。 GAMMA [1l] 是一个软件数据库机,运行在许多通用 CPU 上,作为 UNIX 主机的后端。 它是在 17 个 VAX 11/750 上开发的,这些 VAX 11/750 彼此之间以及 VAX 11/750 主机通过 80 Mb /s 令牌环连接。 八个 GAMMA 处理器有一个本地磁盘设备,可以使用 WiSS 进行访问。 磁盘只能在本地访问,更新和选择操作员仅使用这八个处理器。 其他无盘处理器用于连接处理。 最近,GAMMA 软件已被移植到具有 32 个节点的 Intel iPSC/2 hypercube,每个节点都有一个本地磁盘驱动器。 GAMMA 广泛使用基于散列的算法,其实现方式是每个运算符在多个(通常是所有)处理器上执行,并且每个运算符的输入流根据散列函数划分为不相交的集合。

The limited data model and extensibility of GAMMA led to the search for a more flexible but equally powerful query processing model. The operator design used in the GAMMA database machine software gives each operator control within its own process, leaving it to the network- ing and operating system software to synchronize multi- ple operators in producer-consumer relationships using flow-control mechanisms. This design, while working ex- tremely well in GAMMA, does not lend itself to single- process query evaluation since multiple loci of control, i.e., multiple operators, cannot be realized inside a single process without special pseudo-multiprocess mechanisms such as threads. Therefore, GAMMA’s operator and data transfer concepts are not suitable for an efficient query processing engine intended for both sequential and par- allel query execution.

GAMMA 有限的数据模型和可扩展性导致人们寻找更灵活但同样强大的查询处理模型。 GAMMA数据库机器软件中使用的操作员设计使每个操作员能够在自己的流程中进行控制,将其留给网络和操作系统软件使用流量控制机制来同步生产者-消费者关系中的多个操作员。 这种设计虽然在 GAMMA 中工作得非常好,但不适合单进程查询评估,因为如果没有特殊的伪多进程机制(例如线程),就无法在单个进程内实现多个控制点(即多个运算符)。 因此,GAMMA 的运算符和数据传输概念不适合用于顺序和并行查询执行的高效查询处理引擎。

EXODUS [7] is an extensible database system with some components followiong the “tool-kit” approach, e.g., the optimizer generator [ 131, [ 141 and the E database implementation language [27], [28], and other compo- nents built as powerful but fixed components, e.g., the storage manager [5]. Originally, EXODUS was conceived to be data-model-indepedent, i.e., it was supposed to support a wide variety of data models, but later a novel,powerful,structurally object-oriented data model called Extra was developed. The concept of data model independence as first explored in EXODUS has been retained in the Volcano project and the design and implementation of its software.During the design of the EXODUS storage manager, many storage and access issues explored in WiSS and GAMMA were revisited. Lessons learned and trade-offs explored in these discussions certainly helped in forming the ideas behind Volcano. The design and development of E influenced the strong emphasis on iterators for query processing.

EXODUS [7]是一个可扩展的数据库系统,具有一些遵循“工具包”方法的组件,例如优化器生成器[131,[141]和E数据库实现语言[27],[28]和其他组件 构建为功能强大但固定的组件,例如存储管理器 [5]。 最初,EXODUS 被认为是独立于数据模型的,即它应该支持多种数据模型,但后来开发了一种新颖的、强大的、结构面向对象的数据模型,称为 Extra。 EXODUS 中首次探索的数据模型独立性概念已保留在 Volcano 项目及其软件的设计和实现中。在 EXODUS 存储管理器的设计过程中,重新审视了 WiSS 和 GAMMA 中探索的许多存储和访问问题。 在这些讨论中吸取的经验教训和探索的权衡无疑有助于形成 Volcano 背后的想法。 E 的设计和开发影响了对用于查询处理的迭代器的强烈重视。

A number of further conventional (relational) and extensible systems have influenced our design. Ingres [32] and System R [9] have probably influenced most database systems, in particular their extensible follow-on projects Starburst [23] and Postgres [35]. It is interesting to note that independently of our work the Starburst group has also identified the demand-driven interator paradigm as a suitable basis for an extensible single-process query evaluation architecture after using it successfully in the System R relational system, but as yet has not been able to combine extensibility with parallelism. GENESIS [l] early on stressed the importance of uniform operator in- terfaces for extensibility and software reusability.

许多进一步的传统(关系)和可扩展系统影响了我们的设计。 Ingres [32] 和 System R [9] 可能影响了大多数数据库系统,特别是它们的可扩展后续项目 Starburst [23] 和 Postgres [35]。 有趣的是,独立于我们的工作,Starburst 小组在 System R 关系系统中成功使用需求驱动的交互器范例后,也将其确定为可扩展的单进程查询评估架构的合适基础,但迄今为止 无法将可扩展性与并行性结合起来。 GENESIS [l]很早就强调了统一操作员界面对于可扩展性和软件可重用性的重要性。

XPRS has been the first project aiming to combine extensibility with parallelism [34]. Its basic premise is to implement Postgres on top of RAID disk arrays and the Sprite operating system. XPRS and GAMMA basically differ in four ways. First, GAMMA supports. a purely relational data model while XPRS supports an extensible relational model, Postgres. Second, GAMMA’s main form of parallelism is intra-operator parallelism based on partitioned data sets. XPRS, on the other hand, will rely on bushy parallelism, i.e. , concurrent execution of different subtrees in a complex query evaluation plan. Fourth, GAMMA is built on the premise that distributed memory is required to achieve scalable linear speed-up while XPRS is being im- plemented on a shared-memory machine.

XPRS 是第一个旨在将可扩展性与并行性相结合的项目 [34]。 其基本前提是在 RAID 磁盘阵列和 Sprite 操作系统之上实现 Postgres。 XPRS 和 GAMMA 基本上有四个方面的不同。 首先,GAMMA支持。 纯粹的关系数据模型,而 XPRS 支持可扩展的关系模型 Postgres。 其次,GAMMA 的主要并行形式是基于分区数据集的算子内并行。 另一方面,XPRS 将依赖于密集并行性,即复杂查询评估计划中不同子树的并发执行。第四,GAMMA 的构建前提是需要分布式内存来实现可扩展的线性加速,而 XPRS 是在共享内存机器上实现的。

Both XPRS and Volcano combine parallelism and ex- tensibility, but XPRS is a far more comprehensive project than Volcano. In particular, XPRS includes a data model and a query optimizer. On the other hand, Volcano is more extensible precisely because it does not presume a data model. Therefore, Volcano could be used as the query processing engine in a parallel extensible-relational sys- tem such as XPRS. Moreover, it will eventually include a data-model-independent optimizer generator to form a complete query processing research environment.

XPRS 和 Volcano 都结合了并行性和可扩展性,但 XPRS 是一个比 Volcano 更全面的项目。 具体来说,XPRS 包括数据模型和查询优化器。 另一方面,Volcano 更具有可扩展性,正是因为它不假定数据模型。 因此,Volcano 可以用作并行可扩展关系系统(例如 XPRS)中的查询处理引擎。 而且,它最终将包括一个独立于数据模型的优化器生成器,以形成一个完整的查询处理研究环境。

III. VOLCANO SYSTEM DESIGN

In this section, we provide an overview of the design of Volcano. At the current time, Volcano is a library of about two dozen modules with a total of about 15 000 lines of C code. These modules include a file system, buffer management, sorting, B+-trees, and two algorithms each (sort- and hash-based) for natural join, semi-join, all three outer joins, anti-joint, aggregation, duplicate elimination, union, intersection, difference, anti-difference,and relational division. Moreover, two modules implement dynamic query evaluation plans and allow parallel processing of all algorithms listed above.

在本节中,我们概述了 Volcano 的设计。 目前,Volcano 是一个包含大约两打模块的库,总共大约有 15,000 行 C 代码。 这些模块包括文件系统、缓冲区管理、排序、B+树以及两种算法(基于排序和散列),用于自然连接、半连接、所有三个外连接、反连接、聚合、重复消除、 并集、交集、差集、反差集、关系除法。 此外,两个模块实现动态查询评估计划并允许并行处理上面列出的所有算法。

All operations on individual records are deliberately left open for later definition. Instead of inventing a language in which to specify selection predicates, hash functions,etc., functions are passed to the query processing operators to be called when necessary with the appropriate arguments.These support functions are descibed later in more detail One common and repeating theme in the design of Volcano is that it provides mechanisms for query evaluation to allow selection of and experimentation with policies. The separation of mechanisms and policies is a very well-known and well-established principle in the design and implementation of operating systems, but it has not been used as extensively and consistently in the design and implementation of database systems. It has contributed significantly to the extensibility and modularity of modem operating systems, and may make the same contribution to extensible database systems.

对单个记录的所有操作都故意保留以供以后定义。 不是发明一种语言来指定选择谓词、散列函数等,而是将函数传递给查询处理运算符,以便在必要时使用适当的参数进行调用。稍后将更详细地描述这些支持函数。 一个常见且重复的主题 Volcano 设计的一个亮点是它提供了查询评估机制,以允许选择和试验策略。 机制和策略的分离是操作系统设计和实现中众所周知且公认的原则,但它在数据库系统的设计和实现中尚未得到广泛和一致的使用。 它对现代操作系统的可扩展性和模块化做出了重大贡献,并且可能对可扩展数据库系统做出同样的贡献。

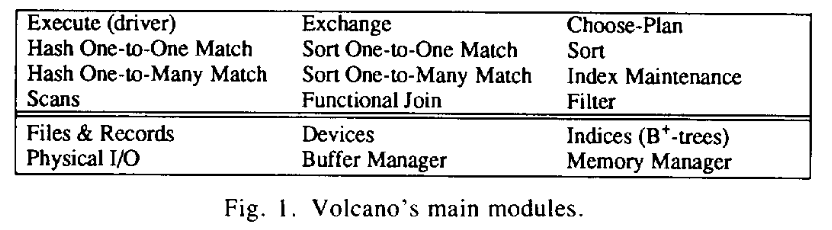

Currently, Volcano consists of two layers, the file system layer and the query processing layer. The former provides record, file, and index operations including scans with optional predicates, and buffering; the latter is a set of query processing modules that can be nested to build complex query evaluation trees. Fig. 1 identifies Volcano’s main modules. This separation can be found in most query evaluation systems, e.g., RSS and RDS in System R [9] and Core and Corona in Starburst [23]. System catalogs or a data dictionary are not included in Volano since the system was designed to be extensible and independent from any particular data model. We start our descirption at the bottom, the file system, and then discuss the query processing modules.

目前,Volcano由两层组成,文件系统层和查询处理层。 前者提供记录、文件和索引操作,包括带有可选谓词的扫描和缓冲; 后者是一组查询处理模块,可以嵌套构建复杂的查询评估树。 图 1 标识了 Volcano 的主要模块。 这种分离可以在大多数查询评估系统中找到,例如 System R [9] 中的 RSS 和 RDS 以及 Starburst [23] 中的 Core 和 Corona。 Volano 中不包含系统目录或数据字典,因为该系统被设计为可扩展且独立于任何特定数据模型。 我们从最底层的文件系统开始描述,然后讨论查询处理模块。

A. The File System

Within our discussion of the Volcano file system, we also proceed bottom-up, from buffer management to data files and indices. The existing facilities are meant to pro- vide a backbone of a query processing system, and are designed such that modifications and additions can easily be accomplished as the need arises. The buffer manager is the most interesting part of the file system. Because buffer management is performance- critical in any database system, the Volcano buffer man- ager was designed to include mechanisms that can be used most effectively and efficiently in a large variety of con- texts and with a wide array of policies. In consequence, its features include multiple buffer pools, variable-length units of buffering that are called clustres in Volcano, and replacement hints from the next higher software level.

在我们对 Volcano 文件系统的讨论中,我们也是自下而上进行的,从缓冲区管理到数据文件和索引。 现有设施旨在提供查询处理系统的骨干,其设计使得可以在需要时轻松完成修改和添加。缓冲区管理器是文件系统中最有趣的部分。 由于缓冲区管理在任何数据库系统中都对性能至关重要,因此 Volcano 缓冲区管理器被设计为包含可在各种上下文和各种策略中最有效和高效地使用的机制。 因此,它的功能包括多个缓冲池、可变长度缓冲单元(在 Volcano 中称为簇)以及来自下一个更高软件级别的替换提示。

The buffer manager’s hint facility is an excellent example of Volcano’s design principle to implement mechanisms to suppent multiple policies. The buffer manager only provides the mechanisms, i.e.,pinning, page replacement, and reading and writing disk pages, while the higher level software determines the policies depending on data semantics, importance, and access patterns. It is surprising that database buffer managers derive replace- ment decisions from observed reference behavior in spite of the fact that this behavior is generated by higher level database software and thus known and foreseeable in ad- vance within the same system, albeit different subcom- ponents.

缓冲区管理器的提示功能是 Volcano 设计原则的一个很好的例子,它实现了支持多种策略的机制。 缓冲区管理器仅提供机制,即固定、页面替换以及读写磁盘页面,而更高级别的软件根据数据语义、重要性和访问模式确定策略。 令人惊讶的是,数据库缓冲区管理器从观察到的参考行为中得出替换决策,尽管这种行为是由更高级别的数据库软件生成的,因此在同一系统内(尽管子组件不同)是已知的和可预见的。

Files are composed of records, clusters, and extents. Since file operations are invoked very frequently in any database system, all design decisions in the file module have been made to provide basic functionality with the highest attainable performance. A cluster, consisting of one or more pages, is the unit of I/O and of buffering, as discussed above. The cluster size is set for each file in- dividually. Thus, different files on the same device can have different cluster sizes. Disk space for files is allo- cated in physically contiguous extents, because extents allow very fast scanning without seeks and large-chunk read-ahead and write-behind.

文件由记录、簇和范围组成。 由于文件操作在任何数据库系统中都会非常频繁地调用,因此文件模块中的所有设计决策都是为了提供具有最高可达到性能的基本功能。 如上所述,簇由一页或多页组成,是 I/O 和缓冲的单位。 簇大小是为每个文件单独设置的。 因此,同一设备上的不同文件可以具有不同的簇大小。 文件的磁盘空间被分配在物理上连续的扩展区中,因为扩展区允许非常快速的扫描而无需查找以及大块的预读和后写。

Records are identified by a record identifier (RID), and can be accessed directly using the RID. For fast access to a large set of records, Volcano supports not only individ- ual file and record operations but also scans that support read-next and append operations. There are two interfaces to file scans; one is part of the file system and is described momentarily; the other is part of the query processing level and is described later. The first one has the standard procedures for file scans, namely open, next, close, and rewind. The next procedure returns the main memory ad- dress of the next record. This address is guaranteed (pinned) until the next operation is invoked on the scan.Thus, getting the next record within the same cluster does not require calling the buffer manager and can be per- formed very efficiently.

记录由记录标识符(RID) 标识,并且可以使用RID 直接访问。 为了快速访问大量记录,Volcano 不仅支持单个文件和记录操作,还支持支持读取下一个和追加操作的扫描。 文件扫描有两个接口; 一个是文件系统的一部分,稍后进行描述; 另一个是查询处理级别的一部分,稍后介绍。 第一个具有文件扫描的标准过程,即 open、next、close和 rewind。 next 过程返回下一条记录的主存地址。 在扫描中调用下一个操作之前,该地址得到保证(固定)。因此,获取同一簇内的下一条记录不需要调用缓冲区管理器,并且可以非常高效地执行。

For fast creation of files, scans support an append op- eration. It allocates a new record slot, and returns the new slot’s main memory address. It is the caller’s responsibil- ity to fill the provided record space with useful information, i.e., the append rourine is entirely oblivious to the data and their representation.

为了快速创建文件,扫描支持追加操作。 它分配一个新的记录槽,并返回新槽的主内存地址。 调用者有责任用有用的信息填充提供的记录空间,即附加例程完全不关心数据及其表示。

Scans also support optional predicates. The predicate function is called by the next procedure with the argument and a record address. Selective scans are the first example of support functions mentioned briefly in the introduction. Instead of determining a qualification itself, the scan mechanism relies on a predicate function imported from a higher level.

扫描还支持可选谓词。 谓词函数由下一个过程使用参数和记录地址调用。 选择性扫描是简介中简要提到的支持功能的第一个示例。 扫描机制不是确定限定本身,而是依赖于从更高级别导入的谓词函数。

Support functions are passed to an operation as a function entry point and a typeless pointer that serves as a predicate argument. Arguments to support functions can be used in two ways, namely in compiled and interpreted query execution. In compiled scans, i.e., when the pred- icate evaluation function is available in macvhine code, the argument can be used to pass a constant or a pointer to several constants to the predicate function. For exam- ple, if the predicate consists of comparing a record field with a string, the comparison function is passed as pred- icate function while the search string is passed as predi- cate argument. In interpreted scans, i.e., when a general interpreter is used to evaluate all predicates in query, they can be used to pass appropriate code to the interpreter. The interpreter’s entry point is given as predicate func- tion. Thus, both interpreted and compiled scans are sup- ported with a single simple and efficient mechanism. Vol- cano’s use of support functions and their arguments is another example for a mechanism that leaves a policy de- cision, in this case whether to use compiled or interpreted scans, open to be decided by higher level software.

支持函数作为函数入口点和充当谓词参数的无类型指针传递给操作。 支持函数的参数可以通过两种方式使用,即在编译和解释查询执行中。 在编译扫描中,即当谓词求值函数在 macvhine 代码中可用时,参数可用于将常量或指向多个常量的指针传递给谓词函数。 例如,如果谓词包括将记录字段与字符串进行比较,则比较函数作为谓词函数传递,而搜索字符串作为谓词参数传递。 在解释扫描中,即当使用通用解释器来评估查询中的所有谓词时,它们可用于将适当的代码传递给解释器。 解释器的入口点作为谓词函数给出。 因此,解释扫描和编译扫描都由一个简单而有效的机制支持。 Volcano 对支持函数及其参数的使用是一种机制的另一个例子,该机制将策略决策(在本例中是使用编译扫描还是解释扫描)由更高级别的软件决定。

indices are implemented currently only in the form of B + -trees with an interface similar to files. A leaf entry consists of a key and information. The information part typically is a RID, but it could include more or different information. The key and the information can be of any type; a comparison function must be provided to compare keys. The comparison function uses an argument equiv- alent to the one described for scan predicates. Permitting any information in the leaves gives more choices in phys- ical database design. It is another example of Volcano providing a mechanism to allow a multitude of designs and usage policies. B + -trees support scans similar to files, including predicates and append operations for fast loading In addition, B+ -tree scans allow seeking to a partic- ular key, and setting lower and upper bounds.

索引目前仅以 B+ 树的形式实现,其接口类似于文件。 叶条目由密钥和信息组成。 信息部分通常是 RID,但它可以包括更多或不同的信息。 密钥和信息可以是任何类型; 必须提供比较函数来比较键。 比较函数使用的参数与扫描谓词所描述的参数等效。 允许叶子中存在任何信息可以为物理数据库设计提供更多选择。 这是 Volcano 提供允许多种设计和使用策略的机制的另一个例子。 B+ 树支持与文件类似的扫描,包括用于快速加载的谓词和附加操作。此外,B+ 树扫描允许查找特定键,并设置下限和上限。

For intermediate results in query processing (later called streams), Volcano uses special devices called virtual de- vices. The difference between virtual and disk devices is that data pages of virtual devices only exist in the buffer. As soon as such data pages are unpinned, they disappear and their contents are lost. Thus, Volcano uses the same mechanisms and function calls for permanent and inter- mediate data sets, easing implementation of new opera- tors significantly.

对于查询处理中的中间结果(后来称为流),Volcano 使用称为虚拟设备的特殊设备。 虚拟设备和磁盘设备的区别在于虚拟设备的数据页只存在于缓冲区中。 一旦此类数据页被取消固定,它们就会消失并且其内容也会丢失。 因此,Volcano 对永久和中间数据集使用相同的机制和函数调用,从而显着简化了新运算符的实现。

In summary, much of Volcano’s file system is conven- tional in its goals but implemented in a flexible, efficient, and compact way. The file system supports basic abstractions and operations, namely devices, files, records,B+-trees, and scans. It provides mechanisms to access these objects, leaving many policy decisions to higher level software. High performance was a very important goal in the design and implementation of these mecha- nisms since performance studies and parallelization only make sense if the underlying mechanisms are efficient. Furthermore, research into implementation and perfor- mance trade-offs for extensible database systems and new data models is only relevant if an efficient evaluation platform is used.

总之,Volcano 的文件系统的大部分目标都是传统的,但以灵活、高效和紧凑的方式实现。 文件系统支持基本的抽象和操作,即设备、文件、记录、B+树和扫描。 它提供了访问这些对象的机制,将许多策略决策留给更高级别的软件。 高性能是这些机制的设计和实现中的一个非常重要的目标,因为只有当底层机制高效时,性能研究和并行化才有意义。 此外,只有使用有效的评估平台,对可扩展数据库系统和新数据模型的实施和性能权衡的研究才有意义。

B. Query Processing

The file system routines described above are utilized by the query processing routines to evaluate complex quer- ies. Queries are expressed as query plans or algebra expressions; the operators of this algebra are query pro- cessing algorithms and we call the algebra an executable algebra as opposed to logical algebras, ‘e .g . , relational algebra. We will describe the operations using relational terminology hoping that this will assist the reader.We must point out, however, that the operations can be viewed and are implemented as operations on sets of objects, and that Volcano does not depend on assumptions about the internal structure of such objects. In fact, we intend to use Volcano for query processing in an object- oriented database system [ 151. The key to this use of Vol- cano is that set processing and interpretation of data items are separated.

查询处理例程利用上述文件系统例程来评估复杂的查询。 查询被表示为查询计划或代数表达式; 该代数的运算符是查询处理算法,我们将该代数称为可执行代数,而不是逻辑代数,“例如” ,关系代数。 我们将使用关系术语来描述这些操作,希望这会对读者有所帮助。但是,我们必须指出,这些操作可以被视为并实现为对对象集的操作,并且 Volcano 不依赖于有关内部对象的假设。 此类对象的结构。 事实上,我们打算在面向对象的数据库系统中使用 Volcano 进行查询处理[151。使用 Volcano 的关键在于数据项的集合处理和解释是分离的。

In Volcano, all algebra operators are implemented as iterators, i.e., they support a simple open-next-close pro- tocol. Basically, iterators provide the iteration component of a loop, i.e., initialization, increment, loop termination condition, and final housekeeping. These functions allow “iteration’ ’ over the results of any operation similar to the iteration over the result of a conventional file scan.Associated with each iterator is a state record type. A state record contains arguments, e.g., the size of a hash table to be allocated in the open procedure, and state, e.g., the location of a hash table. All state information of an iterator is kept in its state record and there are no ‘ ‘static’ ’ variables; thus, an algorithm may be used multiple times in a query by including more than one state record in the query.

在 Volcano 中,所有代数运算符都被实现为迭代器,即它们支持简单的 open-next-close 协议。 基本上,迭代器提供循环的迭代组件,即初始化、增量、循环终止条件和最终内务处理。 这些函数允许对任何操作的结果进行“迭代”,类似于对传统文件扫描的结果进行迭代。与每个迭代器相关联的是一个状态记录类型。 状态记录包含参数(例如要在打开过程中分配的哈希表的大小)和状态(例如哈希表的位置)。 迭代器的所有状态信息都保存在其状态记录中,并且不存在“静态”变量; 因此,通过在查询中包括多于一个状态记录,可以在查询中多次使用算法。

All manipulation and interpretation of data objects,e.g., comparisons and hashing, is passed to the iterators by means of pointers to the entry points of appropriate support functions. Each of these support functions uses an argument allowing interpreted or compiled query eval- uation, as described earlier for file scan predicates. With- out the support functions, Volcano’s iterators are empty algorithm shells that cannot perform any useful work. In effect, the split into algorithm shells and support functions separates control and iteration over sets from interpreta- tion of records or objects. This separation is one of the cornerstones’ of Volcano’s data model independent and extensibility, which will be discussed in Section IV.

数据对象的所有操作和解释,例如比较和散列,都通过指向适当支持函数入口点的指针传递给迭代器。 这些支持函数中的每一个都使用一个允许解释或编译查询评估的参数,如前面针对文件扫描谓词所述。 如果没有支持函数,Volcano 的迭代器就是空的算法外壳,无法执行任何有用的工作。 实际上,分成算法外壳和支持函数将集合的控制和迭代与记录或对象的解释分开。 这种分离是 Volcano 数据模型独立性和可扩展性的基石之一,这将在第四节中讨论。

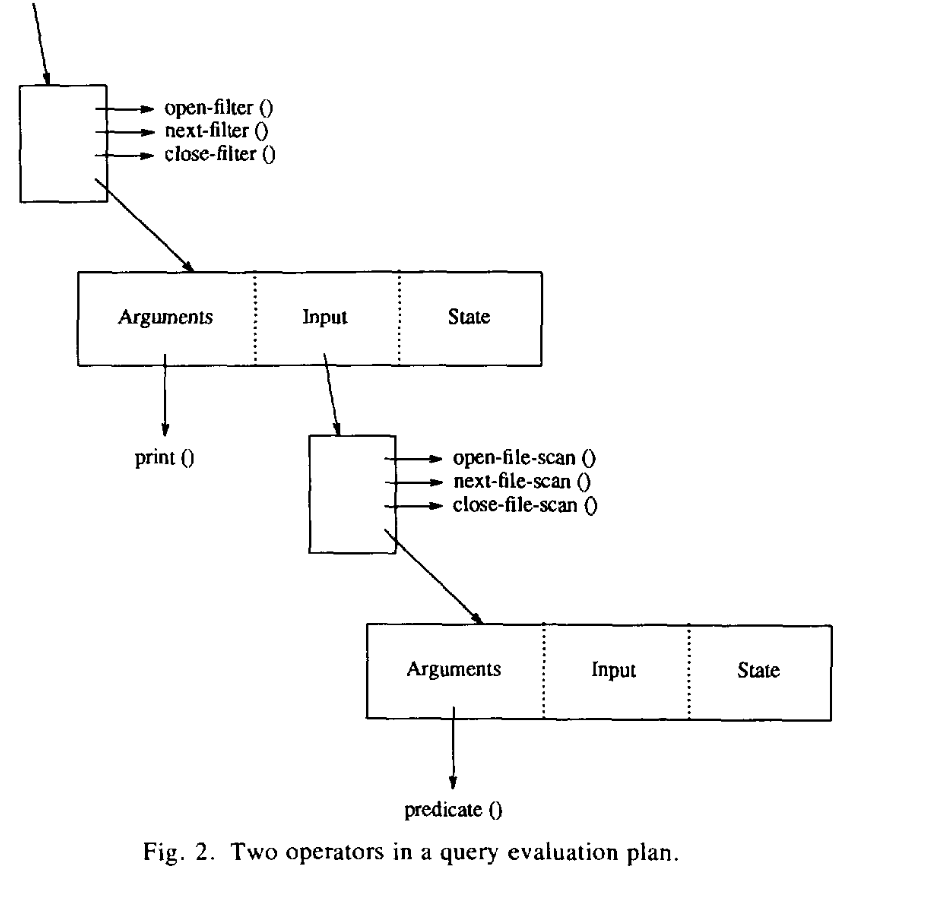

Iterators can be nested and then operate similarly to coroutines. State records are linked together by means of input pointers. The input pointers are also kept in the state records. Fig. 2 shows two operators in a query evaluation plan. Purpose and capabilities of the Jilter operator will be discussed shortly; one of its possible functions is to print items of a stream using a function passed to the filter operator as one of its arguments. The structure at the top gives access to the functions as well as to the state record. Using a pointer to this structure, the filter functions can be called and their local state can be passed to them as a procedure argument. The functions themselves, e.g., open–filter, can use the input pointer contained in the state record to invoke the input operator’s functions. Thus, the filter functions can invoke the file scan functions as needed, and can pace the file scan according to the needs of the filter. In other words, Fig. 2 shows a complete query evaluation plan that prints selected records from a file.

迭代器可以嵌套,然后与协程类似地进行操作。 状态记录通过输入指针链接在一起。 输入指针也保存在状态记录中。 图 2 显示了查询评估计划中的两个运算符。 Jilter 操作员的目的和功能将很快讨论; 它的可能功能之一是使用传递给过滤器运算符作为其参数之一的函数来打印流的项目。 顶部的结构可以访问函数以及状态记录。 使用指向该结构的指针,可以调用过滤器函数,并且可以将它们的本地状态作为过程参数传递给它们。 函数本身(例如 open–filter)可以使用状态记录中包含的输入指针来调用输入运算符的函数。 因此,过滤器函数可以根据需要调用文件扫描函数,并且可以根据过滤器的需要调整文件扫描的速度。 换句话说,图 2 显示了一个完整的查询评估计划,该计划打印从文件中选择的记录。

Using Volcano’s standard form of iterators, an operator does not need to know what kind of operator produces its input, or whether its input comes from a complex query tree or from a simple file scan. We call this concept anon- ymous inputs or streams. Streams are a simple but pow- erful abstraction that allows combining any number and any kind of operators to evaluate a complex query, a sec- ond cornerstone to Volcano’s extensibility. Together with the iterator control paradigm, streams represent the most efficient execution model in terms of time (overhead for synchronizing operators) and space (number of records that must reside in memory concurrently) for single-pro- cess query evaluation.

使用 Volcano 的标准形式的迭代器,操作符不需要知道哪种操作符产生其输入,或者其输入是来自复杂的查询树还是来自简单的文件扫描。 我们将这个概念称为匿名输入或流。 流是一种简单但功能强大的抽象,它允许组合任意数量和任意类型的运算符来评估复杂的查询,这是 Volcano 可扩展性的第二个基石。 与迭代器控制范例一起,流代表了单进程查询评估的时间(同步运算符的开销)和空间(必须同时驻留在内存中的记录数量)方面最有效的执行模型。

Calling open for the top-most operator results in instan- tiations for the associated state record’s state, e.g., allo- cation of a hash table, and in open calls for all inputs. In this way, all iterators in a query are initiated recursively. In order to process the query, next for the top-most op- erator is called repeatedly until it fails with an end-of- stream indicator. The top-most operator calls the next procedure of its input if it needs more input data to pro- duce an output record. Finally, the close call recursively “shuts down” all iterators in the query. This model of query execution matches very closely the ones being in- eluded in the E database implementation language in EX- ODUS and the query executor of the Starburst relational database system.

对最顶层运算符调用 open 会导致关联状态记录的状态实例化,例如哈希表的分配,以及对所有输入的 open 调用。 这样,查询中的所有迭代器都会递归启动。 为了处理查询,重复调用最顶层运算符的 next ,直到它失败并出现流结束指示符。 如果需要更多输入数据来生成输出记录,最顶层的运算符将调用其输入的下一个过程。 最后,关闭调用递归地“关闭”查询中的所有迭代器。 这种查询执行模型与 EXODUS 中的 E 数据库实现语言和 Starburst 关系数据库系统的查询执行器中包含的模型非常匹配。

A number of query and environment parameters may nfluence policy decisions during opening a query evaluation plan, e.g., query predicate constants and system load information. Such parameters are passed between all open procedures in Volcano with a parameter called bindings. This is a typeless pointer that can be used to pass infor- mation for policy decisions. Such policy decisions are im- plemented using support functions again. For example, the module implementing hash join allows dynamic de- termination of the size of a hash table-another example of the separation of mechanism and policy. This bindings parameter is particularly useful in dynamic query evalu- ation plans, which will be discussed later in Section V.

许多查询和环境参数可能会影响打开查询评估计划期间的策略决策,例如查询谓词常量和系统负载信息。 此类参数通过称为绑定的参数在 Volcano 中的所有打开过程之间传递。 这是一个无类型指针,可用于传递策略决策信息。 此类政策决策再次使用支持功能来实施。 例如,实现散列连接的模块允许动态确定散列表的大小——机制和策略分离的另一个例子。 这个绑定参数在动态查询评估计划中特别有用,稍后将在第五节中讨论。

The tree-structured query evaluation plan is used to ex- ecute queries by demand-driven dataflow. The return value of a next operation is, besides a status indicator, a structure called Next-Record, which consists of an RID and a record address in the buffer pool. This record is pinned in the buffer. The protocol about fixing and unfixng records is as follows. Each record pinned in the buffer is owned by exactly one operator at any point in time.After receiving a record, the operator can hold on to it for a while, e.g., in a hash table, unfix it, e.g., when a pred- icate fails, or pass it on to the next operator. Complex operations that create new records, e.g., join, have to fix their output records in the buffer before passing them on, and have to unfix their input records. Since this could re- sult in a large number of buffer calls (one per record in each operator in the query), the interface to the buffer manager was recently redesigned such that it will require a total of two buffer calls per cluster on the procedure side (e. g., a file scan) independently of how many records a cluster contains, and only one buffer call per cluster on the consumer side.

树结构的查询评估计划用于通过需求驱动的数据流执行查询。 下一个操作的返回值除了状态指示符之外,还有一个名为Next-Record的结构,它由RID和缓冲池中的记录地址组成。 该记录被固定在缓冲区中。 关于修复和取消修复记录的协议如下。 固定在缓冲区中的每条记录在任何时间点都由一个操作员拥有。收到一条记录后,操作员可以将其保留一段时间(例如,在哈希表中),然后取消固定它,例如,当预测时 icate 失败,或者将其传递给下一个操作员。 创建新记录的复杂操作(例如连接)必须在传递它们之前修复缓冲区中的输出记录,并且必须取消修复它们的输入记录。 由于这可能会导致大量的缓冲区调用(查询中每个运算符中的每个记录一个),因此最近重新设计了缓冲区管理器的接口,使得过程中的每个集群总共需要两次缓冲区调用 端(例如文件扫描)独立于集群包含多少条记录,并且在消费者端每个集群只有一个缓冲区调用。

A Next-Record structure can point to one record only. All currently implemented query processing algorithms pass complete records between operators, e.g., join creates new, complete records by copying fields from two input records. It can be argued that creating complete new records and passing them between operators is prohibitively expensive. An alternative is to leave original records in the buffer as they were retrieved from the stored data, and compose Next-Record pairs, triples, etc., as intermediate results. Although this alternative results in less memory-to-memory copying, it is not implemented explicitly because Volcano already provides the necessary mechanisms, namely the Biter iterator (see next subsec- tion) that can replace each record in a stream by an RID- pointer pair or vice versa.

下一条记录结构只能指向一条记录。 当前实现的所有查询处理算法都在运算符之间传递完整的记录,例如,连接通过复制两个输入记录中的字段来创建新的完整记录。 可以说,创建完整的新记录并在运营商之间传递它们的成本过高。 另一种方法是将原始记录保留在缓冲区中,因为它们是从存储的数据中检索的,并组合下一个记录对、三元组等作为中间结果。 虽然这种替代方案会减少内存到内存的复制,但它并没有明确实现,因为 Volcano 已经提供了必要的机制,即 Biter 迭代器(参见下一小节),它可以用 RID 指针替换流中的每个记录 配对或反之亦然。

In summary, demand-driven dataflow is implemented by encoding operators as iterators, i.e., with open, next, and close procedures, since this scheme promises gener- ality , extensibility, efficiency, and low overhead. The next few sections describe some of Volcano’s existing iterators in more detail. In very few modules, the described operators provide much of the functionality of other query evaluation systems through generality and separation of mechanisms and policies. Furthermore, the separation of set processing control (iteration) from item interpretation and manipulation provides this functionality independently from any data model.

总之,需求驱动的数据流是通过将运算符编码为迭代器来实现的,即使用 open、next 和 close 过程,因为该方案具有通用性、可扩展性、高效性和低开销。 接下来的几节将更详细地描述 Volcano 的一些现有迭代器。 在极少数模块中,所描述的运算符通过机制和策略的通用性和分离性提供了其他查询评估系统的许多功能。 此外,集合处理控制(迭代)与项目解释和操作的分离提供了独立于任何数据模型的功能。

Scans, Functional Join, and Filter: The first scan interface was discussed with the file system. The second interface to scans, both file scans and B+-tree scans, pro- vides an iterator interface suitable for query processing. The open procedures open the file or B+-tree and initiate a scan using the scan procedures of the file system level. The file name or closed file descriptor are given in the state record as are an optional predicate and bounds for B+-tree scans. Thus, the two scan interfaces are function- ally equivalent. Their difference is that the file system scan interface is used by various internal modules, e.g., by the device module for the device table of contents, while the iterator interface is used to provide leaf operators for query evaluation plans.

扫描、功能连接和过滤:第一个扫描接口是与文件系统一起讨论的。 第二个扫描接口(文件扫描和 B+ 树扫描)提供了适合查询处理的迭代器接口。 打开过程打开文件或 B+ 树并使用文件系统级别的扫描过程启动扫描。 文件名或关闭文件描述符在状态记录中给出,作为 B+ 树扫描的可选谓词和边界。 因此,两个扫描接口在功能上是等效的。 它们的区别在于,文件系统扫描接口由各种内部模块使用,例如,由用于设备内容表的设备模块使用,而迭代器接口用于为查询评估计划提供叶运算符。

Typically, Bf-tree indices hold keys and RID’s in their leaves. In order to use B+-tree indices, the records in the data file must be retrieved. In Volcano, this look-up op- eration is split from the B+-tree scan iterator and is performed by the functional join operator. This operator re- quires a stream of records containing RID’s as input and either outputs the records retrieved using the RID’s or it composes new records from the input records and the re- trieved records, thus “joining” the B+-tree entries and their corresponding data records.

通常,B+ 树索引在其叶子中保存键和 RID。 为了使用 B+ 树索引,必须检索数据文件中的记录。 在 Volcano 中,此查找操作从 B+ 树扫描迭代器中分离出来,并由函数连接运算符执行。 该运算符需要包含 RID 的记录流作为输入,并且输出使用 RID 检索到的记录,或者从输入记录和检索到的记录组成新记录,从而“连接”B+ 树条目及其对应的记录。 数据记录。

B+-tree scan and functional join are separated for a number of reasons. First, it is not clear that storing data in B+-tree leaves never is a good idea. At times, it may be desirable to experiment with having other types of in- formation associated with look-up keys. Second, this sep- aration allows experimentation with manipulation of RID- lists for complex queries. Third, while functional join is currently implemented rather naively, this operation can be made more intelligent to assemble complex objects recursively. In summary, separating index search and record retrieval is another example for porviding mechanisms in Volcano to allow for experiments with policies, a design principle employed to ensure that the Volcano software would be flexible and extensible.

由于多种原因,B+ 树扫描和功能连接是分开的。 首先,目前尚不清楚将数据存储在 B+ 树叶子中永远不是一个好主意。 有时,可能需要尝试使用与查找键相关联的其他类型的信息。 其次,这种分离允许对复杂查询的 RID 列表进行实验操作。 第三,虽然函数式连接目前的实现相当简单,但可以使该操作更加智能,以递归地组装复杂对象。 总之,分离索引搜索和记录检索是 Volcano 中提供允许进行策略实验的机制的另一个例子,这是用于确保 Volcano 软件灵活且可扩展的设计原则.

The filter opeartor used in the example above performs three functions, depeding on presence or absence of corresponding support functions in the state record. The predicate function apples a selection predicate.e.g., to implement bit vector filtering. The transform function creates a new record,typically of a new type, from each old record.An example would be a relational projection (without duplicate elimination). More complex examples include compression and decompression, other changes in codes and representations, and reducing a stream of rec- ords to RID-pointer pairs. Finally, the apply function is invoked once on each record for the venefit of its side effects. Typical examples are updates and printing. Notice that updates are done within streams and query evaluation plans. Thus, Volcano plans are not only retrieval but also update plans. The filter operator is also called the ‘ ‘side-effect operator. ’ ’ Another example is creating a fil- ter for bit vector filtering. In other words, the filter op- erator is a very versatile single-input single-output oper- ator that can be used for a variety of purposes. Bit vector filtering is an example for a special version of separationof policy and mechanism, namely the rule not to provide an operation that can be composed easily and efficiently using existing operations.

上面示例中使用的过滤器运算符执行三个功能,具体取决于状态记录中是否存在相应的支持功能。 谓词函数应用选择谓词,例如,实现位向量过滤。 变换函数从每个旧记录创建一个新记录,通常是新类型。一个例子是关系投影(没有重复消除)。 更复杂的示例包括压缩和解压缩、代码和表示的其他更改以及将记录流减少为 RID 指针对。 最后,apply 函数在每条记录上调用一次,以消除其副作用。 典型的例子是更新和打印。 请注意,更新是在流和查询评估计划内完成的。 因此,Volcano计划不仅是检索计划,而且是更新计划。 过滤运算符也称为“副作用运算符”。 ” 另一个例子是创建一个用于位向量过滤的过滤器。 换句话说,过滤器运算符是一种非常通用的单输入单输出运算符,可用于多种目的。 位向量过滤是策略和机制分离的特殊版本的示例,即不提供可以使用现有操作轻松且高效地组合的操作的规则。

One-to-One Match: Together with the filter opera- tor, the one-to-one match operator will probably be among the most frequently used query processing operators in Volcano as it implements a variety of set-matching func- tions. In a single operator, it realizes join, semi-join, outer joint, anti-joint, intersection, union, difference, anti-dif- ference, aggregation, and duplicate elimination. The one- to-one match operator is a physical operator like sort, i.e., part of the executable algebra, not a logical operator like the operators of relational algebra. It is the operator that implements all operations in which an item is included in the output depending on the result of a comparison be- tween a pair of items.

一对一匹配:与过滤运算符一起,一对一匹配运算符可能是 Volcano 中最常用的查询处理运算符之一,因为它实现了各种集合匹配功能。 在单个算子中,它实现了连接、半连接、外连接、反连接、交集、并集、差分、反差分、聚合和重复消除。 一对一匹配运算符是像排序这样的物理运算符,即可执行代数的一部分,而不是像关系代数运算符那样的逻辑运算符。 该运算符实现所有操作,其中根据一对项目之间的比较结果将项目包含在输出中。

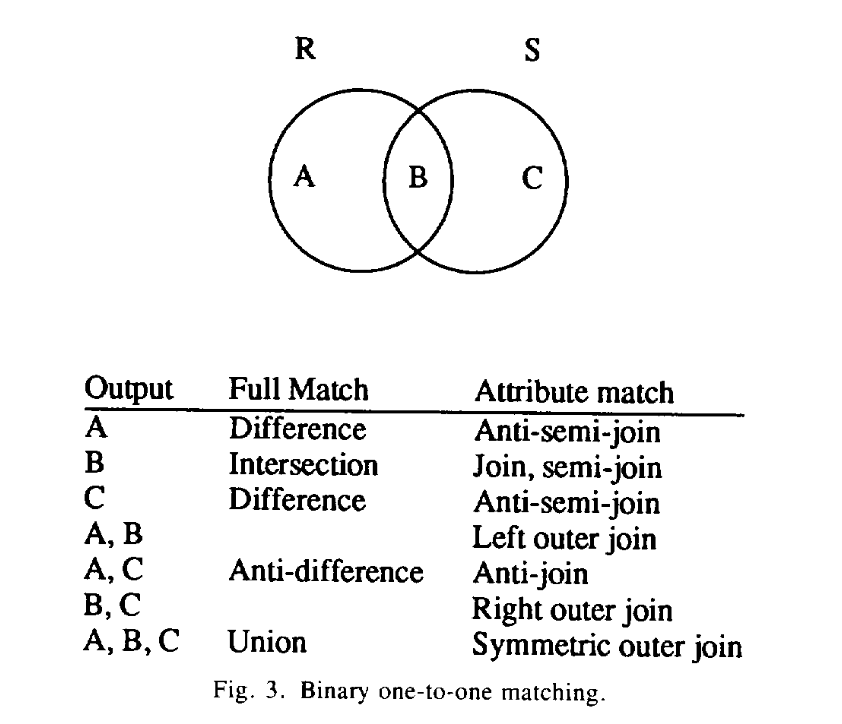

Fig. 3 shows the basic principle underlying the one-to- one match operator for binary operations, namely sepa- ration of the matching and non-matching components of two sets, called R and S in the Fig. 3, and producing ap- propriate subsets, possibly after some transformation and combination as in the case of a join. Since all these operations require basically the same steps, it was logical to mplement them in one general and efficient module. The main difference between the unary and binary operations, e.g., aggregate functions and equi-join, is that the former require comparing items of the same input while the latter require comparing items of two different inputs.

图 3 显示了二元运算的一对一匹配运算符的基本原理,即分离两个集合的匹配和不匹配分量(在图 3 中称为 R 和 S),并生成 ap- 适当的子集,可能是在一些转换和组合之后,如连接的情况。 由于所有这些操作都需要基本相同的步骤,因此在一个通用且高效的模块中实现它们是合乎逻辑的。 一元和二元运算(例如聚合函数和等连接)之间的主要区别在于,前者需要比较相同输入的项目,而后者需要比较两个不同输入的项目。

Since the implementation of Volcano’s one-to-one match is data-model-independent and all operations on data items are imported via support functions, the module s not restricted to the relational model but can perform set matching functions for arbitrary data types. Furthermore, the hash-based version provides recursive hash table overflow avoidance [121and resolution similar to hybrid hash join [31] and can therefore handle very large nput sizes. The sort-based version of one-to-one match is based on an external sort operator and can also operate on arbitrarily large inputs.

由于Volcano的一对一匹配的实现是与数据模型无关的,并且对数据项的所有操作都是通过支持函数导入的,因此该模块不限于关系模型,而是可以对任意数据类型执行集合匹配函数。 此外,基于散列的版本提供了递归散列表溢出避免[121]和类似于混合散列连接[31]的解决方案,因此可以处理非常大的输入大小。 一对一匹配的基于排序的版本基于外部排序运算符,并且还可以对任意大的输入进行操作。

While there seems to be an abundance of join algo- rithms, our design goals of extensibility and limited sys- tem size led to the choice of only two algorithms (at the current time) to be implemented in Volcano, namely merge join and hybrid hash join. This choice will also allow experimental research into the duality and trade-offs between sort- and hash-based query processing algo- rithms.

虽然连接算法似乎很丰富,但我们的可扩展性和有限系统规模的设计目标导致我们只选择了两种算法(目前)在 Volcano 中实现,即合并连接和混合哈希 加入。 这种选择还允许对基于排序和基于散列的查询处理算法之间的二元性和权衡进行实验研究。

The classic hashjoin algorithm (which is the in-mem- ory component of hybrid hash join) proceeds in two phases. In the first phase, a hash table is built from one input; it is therefore called the build phase. In the second phase, the hash table is probed using tuples from the other input to determine matches and to compose output tuples; it is called the probe phase. After the probe phase, the hash table and its entries are discarded. Instead, our one- to-one match operator uses a third phase called thejush phase, which is needed for aggregate functions and some other operations.

经典的哈希连接算法(混合哈希连接的内存组件)分两个阶段进行。 在第一阶段,根据一个输入构建哈希表; 因此,它被称为构建阶段。 在第二阶段,使用来自其他输入的元组来探测哈希表以确定匹配并组成输出元组; 它被称为探测阶段。 探测阶段结束后,哈希表及其条目将被丢弃。 相反,我们的一对一匹配运算符使用称为 thejush 阶段的第三阶段,这是聚合函数和其他一些操作所需要的。

Since the one-to-one match operator is an interator like all Volcano operators, the three phases are assigned to the open, next, and close functions. Open includes the build phase, while the other two phases are included in the next function. Successive invocations of the next function au- tomatically switch from the probe phase to the flush phase when the second input is exhausted.

由于一对一匹配运算符像所有 Volcano 运算符一样是一个迭代器,因此三个阶段被分配给 open、next 和 close 函数。 Open 包含构建阶段,而其他两个阶段包含在 next 函数中。 当第二个输入耗尽时,连续调用下一个函数会自动从探测阶段切换到刷新阶段。

The build phase can be used to eliminate duplicates or to perform an aggregate function in the build input. The one-to-one match module does not require a probe input; if only an aggregation is required without subsequent join, the absence of the probe input in the state record signals to the module that the probe phase should be skipped. For aggregation, instead of inserting a new tuple into the hash table as in the classic hash join, an input tuple is first matched with the tuples in its prospective hash bucket. If a match is found, the new tuple is discarded or its values are aggregated into the existing tuple.

构建阶段可用于消除重复项或在构建输入中执行聚合函数。 一对一匹配模块不需要探针输入; 如果仅需要聚合而无需后续连接,则状态记录中缺少探测输入会向模块发出应跳过探测阶段的信号。 对于聚合,不是像经典哈希连接那样将新元组插入到哈希表中,而是首先将输入元组与其预期哈希桶中的元组进行匹配。 如果找到匹配项,则丢弃新元组或将其值聚合到现有元组中。

While hash tables in main memory are usually quite fast, a severe problem occurs if the build input does not fit in main memory. This situation is called hash table overjlow. There are two ways to deal with hash table over- flow. First, if a query optimizer is used and can anticipate overflow, it can be avoided by partitioning the input(s). This overjlow avoidance technique is the basis for the hash join algorithm used in the Grace database machine [121. Second, overflow files can be created using overfrow res- olution after the problem occurs.

虽然主内存中的哈希表通常非常快,但如果构建输入不适合主内存,则会出现严重问题。 这种情况称为哈希表溢出。 有两种方法可以处理哈希表溢出。 首先,如果使用查询优化器并且可以预见溢出,则可以通过对输入进行分区来避免溢出。 这种过度避免技术是 Grace 数据库机中使用的哈希连接算法的基础 [121。 其次,可以在问题发生后使用溢出解决方案创建溢出文件。

For Volcano’s one-to-one match, we have adopted hy- brid hash join. Compared to the hybrid hash algorithm used in GAMMA, our overflow resolution scheme has several improvements. Items can be inserted into the hash table without copying, i.e., the hash table points directly to records in the buffer as produced by one-to-one match’s build input. If input items are not densely packed, how- ever, the available buffer memory can fill up very quickly. Therefore, the one-to-one match operator has an argu- ment called the packing threshold. When the number of items in the hash table reaches this threshold, items are packed densely into overflow files. However, the clusters (pages) of these overflow files are not yet unfixed in the buffer, i.e., no t / O is performed as yet. Only when the number of items in the hash table reaches a second thresh- old called spilling threshold is the first of the partition files unfixed. The clusters of this file are written to disk and the count of items in the hash table accordingly re- duced. When this number reaches the spilling threshold again, the next partition is unfixed, etc. If necessary, par- titioning is performed recursively, with automatically ad- justed packing and spilling thresholds. The unused por- tions of the hash table, i.e., the portions corresponding to spilled buckets, are used for bit vector filtering to save I/O to overflow files.

对于 Volcano 的一对一匹配,我们采用了混合哈希连接。 与 GAMMA 中使用的混合哈希算法相比,我们的溢出解决方案有一些改进。 可以将项目插入到哈希表中而无需复制,即哈希表直接指向由一对一匹配的构建输入生成的缓冲区中的记录。 然而,如果输入项不是密集排列的,则可用缓冲存储器很快就会被填满。 因此,一对一匹配运算符有一个称为打包阈值的参数。 当哈希表中的项目数量达到此阈值时,项目将被密集地打包到溢出文件中。 然而,这些溢出文件的簇(页)在缓冲区中尚未被固定,即尚未执行t/O。 仅当哈希表中的项目数达到称为溢出阈值的第二个阈值时,第一个分区文件才会被取消修复。 该文件的簇被写入磁盘,哈希表中的项目数相应减少。 当这个数字再次达到溢出阈值时,下一个分区将不固定,等等。如果有必要,分区会递归执行,并自动调整打包和溢出阈值。 哈希表的未使用部分,即与溢出桶相对应的部分,用于位向量过滤,以节省溢出文件的 I/O。

The fan-out of the first partitioning step is determined by the total available memory minus the memory required to reach the packing threshold. By choosing the packing and spilling thresholds, a query optimizer can avoid re- cord copying entirely for small build inputs, specify overflow avoidance (and the maximum fan-out) for very large build inputs, or determine packing and spilling thresholds based on the expected build input size. In fact, because the input sizes cannot be estimated precisely if the inputs are produced by moderately complex expres- sions, the optimizer can adjust packing and spilling thresholds based on the esimated probability distributions of input sizes. For example, if overflow is very unlikely, it might be best to set the packing threshold quite high such that, with high probability, the operation can pro- ceed without copying. On the other hand, if overflow is more likely, the packing threshold should be set lower to obtain a larger partitioning fan-out.

第一个分区步骤的扇出由总可用内存减去达到打包阈值所需的内存确定。 通过选择打包和溢出阈值,查询优化器可以完全避免小型构建输入的记录复制,为非常大的构建输入指定溢出避免(和最大扇出),或者根据预期确定打包和溢出阈值 构建输入大小。 事实上,由于如果输入是由中等复杂的表达式生成的,则无法精确估计输入大小,因此优化器可以根据输入大小的估计概率分布来调整打包和溢出阈值。 例如,如果溢出的可能性很小,则最好将打包阈值设置得相当高,以便很有可能操作可以继续进行而无需复制。 另一方面,如果溢出的可能性更大,则应将打包阈值设置得较低以获得更大的分区扇出。

The initial packing and spilling thresholds can be set to zero; in that case, Volcano’s one-to-one match performs overflow avoidance very similar to the join algorithm used in the Grace database machine. Beyond this parameter- ization of overflow avoidance and resolution, Volcano’s one-to-one match algorithm also permits optimizations of cluster size and recursion depth similar to the ones used for sorting [4], [21] and for nonuniform hash value dis- tributions, and it can operate on inputs with variable- length records.

初始打包和溢出阈值可以设置为零; 在这种情况下,Volcano 的一对一匹配执行溢出避免,与 Grace 数据库机器中使用的连接算法非常相似。 除了溢出避免和解析的参数化之外,Volcano 的一对一匹配算法还允许优化簇大小和递归深度,类似于用于排序 [4]、[21] 和非均匀哈希值分布的优化 ,并且它可以对具有可变长度记录的输入进行操作。

The extension of the module described so far to set op- erations started with the observation that the intersection of two union-compatible relations is the same as the nat- ural join of these relations, and can be best implemented as semi-join. The union is the (double-sided) outer join of union-compatible relations. Difference and anti-differ- ence of two sets can be computed using special settings of the algorithm’s bells and whistles. Finally, a Cartesian product can be implemented by matching successfully all possible pairs of items from the two inputs.

到目前为止描述的模块对设置操作的扩展始于观察到两个并集兼容关系的交集与这些关系的自然连接相同,并且可以最好地实现为半连接。 联合是联合兼容关系的(双面)外部联接。 可以使用算法花哨的特殊设置来计算两个集合的差异和反差异。 最后,可以通过成功匹配两个输入中所有可能的项目对来实现笛卡尔积。

A second version of one-to-one match is based on sort- ing. Its two modules are a disk-based merge-sort and the actual merge-join. Merge-join has been generalized sim- ilarly to hash-join to support semi-join, outer join, anti-join, and set operations. The sort operator has been im- plemented in such a way that it uses and provides the it- erator interface. Opening the sort iterator prepares sorted runs for merging. If the number of runs is larger than the maximal fan-in, runs are merged into larger runs until the remaining runs can be merged in a single step. The final merge is performed on demand by the next function. If the entire input fits into the sort buffer, it is kept there until demanded by the next function. The sort operator also supports aggregation and duplicate elimination. It can perform these operations early, i.e., while writing tem- porary files [2]. The sort algorithm is described and eval- uated in detail in [2****11.

一对一匹配的第二个版本基于排序。 它的两个模块是基于磁盘的合并排序和实际的合并连接。 合并连接已被推广为类似于散列连接,以支持半连接、外连接、反连接和集合操作。 排序运算符的实现方式是使用并提供迭代器接口。 打开排序迭代器为合并排序运行做好准备。 如果运行数量大于最大扇入,运行将合并为更大的运行,直到可以在单个步骤中合并剩余运行。 最终合并由下一个函数按需执行。 如果整个输入适合排序缓冲区,则它将保留在那里,直到下一个函数需要为止。 排序运算符还支持聚合和重复消除。 它可以尽早执行这些操作,即在写入临时文件时[2]。 排序算法在[211.

In summary, Volcano’s one-to-one match operators are very powerful parts of Volcano’s query execution alge- bra. By separating the control required to operate on sets of items and the interpretation and manipulation of indi- vidual items it can perform a variety of set matching tasks frequently used in database query processing, and can perform these tasks for arbitrary data types and data models. The separation of mechanisms and policies for overflow management supports overflow avoidance as well as hybrid hash overflow resolution, both recursively if required. Implementing sort- and hash-based algo- rithms in a comparable fashion will allow meaningful ex- perimental research into the duality and trade-offs be- tween sort- and hash-based query processing algorithms. The iterator interface guarantees that the one-to-one match operator can easily be combined with other operations, including new iterators yet to be designed.

总之,Volcano 的一对一匹配运算符是 Volcano 查询执行代数中非常强大的部分。 通过分离对项目集进行操作所需的控制以及对单个项目的解释和操作,它可以执行数据库查询处理中经常使用的各种集合匹配任务,并且可以对任意数据类型和数据模型执行这些任务。 溢出管理机制和策略的分离支持溢出避免以及混合散列溢出解决,如果需要的话可以递归地解决。 以类似的方式实现基于排序和散列的算法将允许对基于排序和散列的查询处理算法之间的二元性和权衡进行有意义的实验研究。 迭代器接口保证一对一匹配运算符可以轻松地与其他操作组合,包括尚未设计的新迭代器。

One-to-Many Match: While the one-to-one match operator includes an item in its output depending on a comparison of two items with one another, the one-to- many match operator compares each item with a number of other items to determine whether a new item is to be produced. A typical example is relational division, the re- lational algebra operator corresponding to universal quan- tification in relational calculus. There are two versions of relational division in Volcano. The first version, called native division, is based on sorting. The second version, called hash-division, utilizes two hash tables, one on the divisor and one on the quotient. An exact description of the two algorithms and altemative algorithms based on aggregate functions can be found in [16] along with ana- lytical and experimental performance comparisons and detailed discussions of two partitioning strategies for hash table overflow and multiprocessor implementations. W e are currently studying how to generalize these algo- rithms in a way comparable with the generalizations of aggregation and join, e.g., for a majority function.

一对多匹配:一对一匹配运算符根据两个项目之间的比较在其输出中包含一个项目,而一对多匹配运算符将每个项目与多个其他项目进行比较 以确定是否要生产新产品。 一个典型的例子是关系除法,关系代数运算符对应于关系微积分中的通用量化。 Volcano 中的关系划分有两个版本。 第一个版本称为本机划分,基于排序。 第二种版本称为哈希除法,它使用两个哈希表,一个用于除数,一个用于商。 两种算法和基于聚合函数的替代算法的准确描述可以在[16]中找到,以及分析和实验性能比较以及哈希表溢出和多处理器实现的两种分区策略的详细讨论。 我们目前正在研究如何以与聚合和连接的泛化相当的方式来泛化这些算法,例如,对于多数函数.

IV. EXTENSIBILITY

A number of database research efforts strive for exten- sibility, e.g., EXODUS, GENESIS, Postgres, Starburst, DASDBS [30], Cactis [24], and others. Volcano is a very open query evaluation architecture that provides easy ex- tensibility. Let us consider a number of frequently pro- posed database extensions and how they can be accom- modated in Volcano.

许多数据库研究工作都致力于可扩展性,例如 EXODUS、GENESIS、Postgres、Starburst、DASDBS [30]、Cactis [24] 等。 Volcano 是一个非常开放的查询评估架构,提供简单的可扩展性。 让我们考虑一些经常提出的数据库扩展以及如何将它们容纳在 Volcano 中。

First, when extending the object type system, e.g., with a new abstract data type (ADT) like date or box, the Volcano software is not affected at all because it does not provide a type system for objects. All manipulation of and calculation based on individual objects is performed by support functions. To a certain extent, Volcano is incomplete (it is not a database system), but by separating set processing and instance interpretation and providing a well-defined interface between them, Volcano is inherently extensible on the level of instance types and semantics.

首先,当扩展对象类型系统时,例如使用日期或框等新的抽象数据类型(ADT),Volcano 软件根本不受影响,因为它不提供对象的类型系统。 所有基于单个对象的操作和计算都是由支持函数执行的。 在某种程度上,Volcano 是不完整的(它不是一个数据库系统),但是通过将集合处理和实例解释分开并在它们之间提供定义良好的接口,Volcano 在实例类型和语义层面上具有本质上的可扩展性。

As a rule, data items that are transferred between operators using some next iterator procedure are records. For an extensible or object-oriented database system, this would be an unacceptable problem and limitation. The solution to be used in Volcano is to pass only the root component (record) between operators after loading and fixing necessary component records in the buffer and suit- ably swizzling inter-record pointers. Very simple objects can be assembled in Volcano with the functional join op- erator. Generalizations of this operator are necessary for object-oriented o r non-first-normal-form database sys- tems, but can be included in Volcano without difficulty. In fact, a prototype for such an assembly operator has been built [ 2 6 ] for use in the revelation object - oriented database systems project [151.

通常,使用某个下一个迭代器过程在运算符之间传输的数据项是记录。 对于可扩展或面向对象的数据库系统,这将是一个不可接受的问题和限制。 Volcano 中使用的解决方案是在缓冲区中加载和修复必要的组件记录并适当混合记录间指针后,仅在运算符之间传递根组件(记录)。 非常简单的对象可以通过函数连接运算符在 Volcano 中组装。 该运算符的推广对于面向对象或非第一范式数据库系统是必要的,但可以毫无困难地包含在 Volcano 中。 事实上,这样一个汇编操作符的原型已经被构建出来了[2 6],用于revelation 面向对象的数据库系统项目[151]。

Second, in order to add new functions on individual objects or aggregate functions, e.g., geometric mean, to the database and query processing system, the appropriate support function is required and passed to a query pro- cessing routine. In other words, the query processing rou- tines are not affected by the semantics of the support func- tions as long as interface and return values are correct. The reason Volcano software is not affected by extensions of the functionality on individual objects is that Volcano’s software only provides abstractions and implementations for dealing with and sequencing sets of objects using streams, whereas the capabilities for interpreting and ma- nipulating individual objects are imported in the form of support functions.

其次,为了向数据库和查询处理系统添加单个对象或聚合函数的新函数(例如几何平均值),需要适当的支持函数并将其传递给查询处理例程。 换句话说,只要接口和返回值正确,查询处理例程就不会受到支持函数语义的影响。 Volcano 软件不受单个对象功能扩展影响的原因是,Volcano 软件仅提供使用流处理和排序对象集的抽象和实现,而解释和操作单个对象的功能是在 Volcano 软件中导入的。 支持功能的形式。

Third, in order to incorporate a new access method, e.g., multidimensional indices in form of R-trees [22], appropriate iterators have to be defined. Notice that it makes sense to perform not only retrieval but also main- tenance of storage structures in the form of iterators. For example, if a set of items defined via a predicate (selec- tion) needs to be updated, the iterator or query tree im- plementing the selection can “feed” its data into a main- tenance iterator. The items fed into the maintenance operator should include a reference to the part of the storage structure to be updated, e.g., a RID or a key, and appropriate new values if they have been computed in the selection, e.g., new salaries from old salaries. Updating multiple structures (multiple indices) can be organized and executed very efficiently using nested iterators, i.e., a query evaluation plan. Furthermore, if ordering makes maintenance more efficient as for B-trees, an ordering or sort iterator can easily be included in the plan. In other words, it makes sense to think of plans not only as query plans used in retrieval but also as “update plans” or com- binations of retrieval and update plans. The stream con- cept is very open; in particular, anonymous inputs shield existing query processing modules and the new iterators from one another.

第三,为了合并新的访问方法,例如 R 树 [22] 形式的多维索引,必须定义适当的迭代器。 请注意,不仅以迭代器的形式执行检索,而且还执行存储结构的维护都是有意义的。 例如,如果需要更新通过谓词(选择)定义的一组项目,则实现选择的迭代器或查询树可以将其数据“馈送到”维护迭代器中。 馈入维护操作符的项目应包括对要更新的存储结构部分的引用,例如,RID或密钥,以及适当的新值(如果它们已在选择中计算),例如,从旧工资中计算出新工资 。 使用嵌套迭代器(即查询评估计划)可以非常有效地组织和执行更新多个结构(多个索引)。 此外,如果排序使 B 树的维护更加高效,则可以轻松地将排序或排序迭代器包含在计划中。 换句话说,将计划不仅视为检索中使用的查询计划,而且视为“更新计划”或检索和更新计划的组合是有意义的。 流的概念非常开放; 特别是,匿名输入可以屏蔽现有查询处理模块和新迭代器之间的相互影响。

Fourth, to include a new query processing algorithm in Volcano, e.g., an algorithm for transitive closure or nest and unnest operations for nested relations, the algorithm needs to be coded in the iterator paradigm. In other words, the algorithm implementation must provide open, next, and close procedures, and must use these procedures for its input stream or streams. After an algorithm has been brought into this form, its integration with Volcano is trivial. In fact, as the Volcano query processing software became more complex and complete, this was done a number of times. For example, the one-to-many match or division operators [16] were added without regard to the other operators, and when the early in-memory-only ver- sion of hash-based one-to-one match was replaced by the version with overflow management described above, none of the other operators or meta-operators had to be changed. Finally, a complex object assembly operator was added recently to Volcano [26].

第四,要在 Volcano 中包含新的查询处理算法,例如用于嵌套关系的传递闭包或嵌套和取消嵌套操作的算法,该算法需要以迭代器范例进行编码。 换句话说,算法实现必须提供 open、next 和 close 过程,并且必须将这些过程用于其一个或多个输入流。 当算法采用这种形式后,它与 Volcano 的集成就很简单了。 事实上,随着 Volcano 查询处理软件变得更加复杂和完整,这种情况已经被完成了很多次。 例如,添加了一对多匹配或除法运算符[16],而不考虑其他运算符,并且当基于哈希的一对一匹配的早期仅内存版本被替换为 在上述具有溢出管理的版本中,无需更改任何其他运算符或元运算符。 最后,Volcano 最近添加了一个复杂的对象组装操作符 [26]。

Extensibility can also be considered in a different con- text. In the long run, it clearly is desirable to provide an interactive front-end to make using Volcano easier. We are currently working on a two front-end, a nonoptimized command interpreter based on Volcano’s executable al- gebra and an optimized one based on a logical algebra or calculus language including query optimization imple- mented with a new optimizer generator. The translation between plans as produced by an optimizer and Volcano will be accomplished using a module that “walks” query evaluation plans produced by the optimizer and Volcano plans, i.e., state records, support functions, etc. We will also use the optimizing front-end as a vehicle for experi- mentation with dynamic query evaluation plans that are outlined in the next section.

还可以在不同的上下文中考虑可扩展性。 从长远来看,显然需要提供一个交互式前端以使 Volcano 的使用更加容易。 我们目前正在开发一种双前端,一种是基于 Volcano 可执行代数的非优化命令解释器,另一种是基于逻辑代数或微积分语言的优化命令解释器,包括使用新的优化器生成器实现的查询优化。 优化器和 Volcano 生成的计划之间的转换将使用一个模块来完成,该模块“遍历”优化器和 Volcano 计划生成的查询评估计划,即状态记录、支持函数等。我们还将使用优化前端 最终作为动态查询评估计划实验的工具,这些计划将在下一节中概述。

In summary, since Volcano is very modular in its de- sign, extensibility is provided naturally. It could be ar- gued that this is the case only because Volcano does not address the hard problems in extensibility. However, this argument does not hold. Rather, Volcano is only one component of a database system, namely the query exe- cution engine. Therefore, it addresses only a subset of the extensibility problems and ignores a different subset. As a query processing engine, it provides extensibility of its set of query processing algorithms, and it does so in a way that matches well with the extensibility as provided by query optimizer generators. It does not provide other da- tabase services and abstractions like a type system and type checking for the support functions since it is not an extensible database system. The Volcano routines assume that query evaluation plans and their support functions are correct. Their correctness has to be ensured before Vol- cano is invoked, which is entirely consistent with the gen- eral database systems concept to ensure correctness at the highest possible level, i.e., as soon as possible after a user query is parsed. The significance of Volcano as an exten- sible query evaluation system is that it provides a simple but very useful and powerful set of mechanisms for effi- cient query processing and that it can and has been used as a flexible research tool. Its power comes not only from the fact that it has been implemented following a few con- sistent design principles but also from its two meta-op- erators described in the next two sections.

总而言之,由于 Volcano 的设计非常模块化,因此自然地提供了可扩展性。 可以说,出现这种情况只是因为 Volcano 没有解决可扩展性方面的难题。 然而,这个论点并不成立。 相反,Volcano 只是数据库系统的一个组件,即查询执行引擎。 因此,它仅解决可扩展性问题的一个子集并忽略不同的子集。 作为查询处理引擎,它提供了查询处理算法集的可扩展性,并且它的实现方式与查询优化器生成器提供的可扩展性很好地匹配。 它不提供其他数据库服务和抽象,例如类型系统和支持功能的类型检查,因为它不是可扩展的数据库系统。 Volcano 例程假设查询评估计划及其支持函数是正确的。 在调用 Volcano 之前必须确保它们的正确性,这与一般数据库系统的概念完全一致,以确保尽可能高的级别的正确性,即在解析用户查询后尽快确保正确性。 Volcano 作为一个可扩展的查询评估系统的重要性在于,它为高效查询处理提供了一套简单但非常有用且强大的机制,并且它可以并且已经被用作灵活的研究工具。 它的力量不仅来自于它是按照一些一致的设计原则实现的,而且还来自于接下来两节中描述的两个元运算符。

V. DYNAMIC QUERY EVALUATION PLANS

In most database systems, a query embedded in a program written in a conventional programming language is opti- mized when the program is compiled. The query opti- mizer must make assumptions about the values of the pro- gram variables that appear as constants in the query and the data in the database. These assumptions include that the query can be optimized realistically using guessed “typical” values for the program variables and that the database will not change significantly between query op- timization and query evaluation. The optimizer must also anticipate the resources that can be committed to query evaluation, e.g., the size of the buffer or the number of processors. The optimality of the resulting query evalua- tion plan depends on the validity of these assumptions. If a query evaluation plan is used repeatedly over an ex- tended period of time, it is important to determine when reoptimization is necessary. We are working on a scheme in which reoptimization can be avoided by using a new technique called dynamic query evaluation plans [I **71.**’

在大多数数据库系统中,嵌入在用传统编程语言编写的程序中的查询在程序编译时被优化。 查询优化器必须对查询中作为常量出现的程序变量的值和数据库中的数据做出假设。 这些假设包括可以使用程序变量的猜测“典型”值来实际优化查询,并且数据库在查询优化和查询评估之间不会发生显着变化。 优化器还必须预测可用于查询评估的资源,例如缓冲区的大小或处理器的数量。 结果查询评估计划的最优性取决于这些假设的有效性。 如果在较长时间内重复使用查询评估计划,则确定何时需要重新优化非常重要。 我们正在研究一种方案,通过使用一种称为动态查询评估计划的新技术来避免重新优化[I 71。”

Volcano includes a choose-plan operator that allows re- alization of both multiplan access modules and dynamic plans. In some sense, it is not an operator as it does not perform any data manipulations. Since it provides control for query execution it is a metu-operator. This operator provides the same open-next-close protocol as the other operators and can therefore be inserted into a query plan at any location. The open operation decides which of sev- eral equivalent query plans to use and invokes the open operation for this input. Open calls upon a support func- tion for this policy decision, passing it the bindings pa- rameter described above. The next and close operations simply call the appropriate operation for the input chosen during open.

Volcano 包括一个选择计划运算符,允许实现多计划访问模块和动态计划。 从某种意义上说,它不是一个运算符,因为它不执行任何数据操作。 由于它提供对查询执行的控制,因此它是一个元运算符。 该运算符提供与其他运算符相同的打开-下一个-关闭协议,因此可以插入到查询计划的任何位置。 open 操作决定使用几个等效查询计划中的哪一个,并为此输入调用 open 操作。 Open 调用此策略决策的支持函数,并向其传递上述绑定参数。 接下来和关闭操作只需为打开期间选择的输入调用适当的操作。

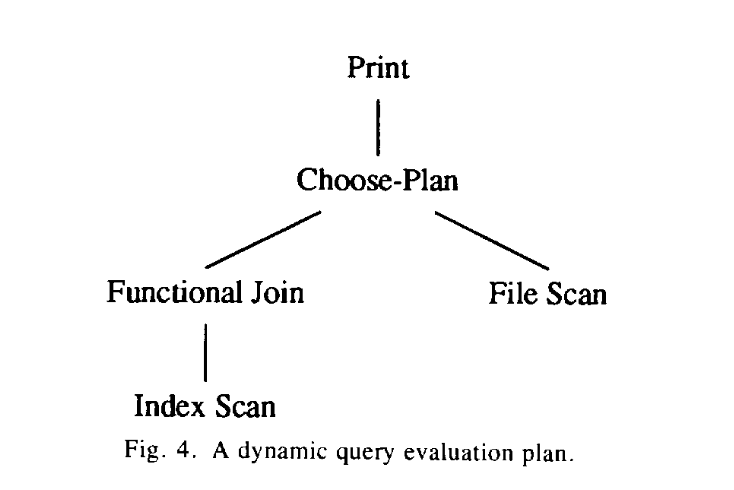

Fig. 4 shows a very simple dynamic plan. Imagine a selection predicate controlled by a program variable. The index scan and functional join can be much faster than the file scan, but not when the index is nonclustering and a large number of items must be retrieved. Using the plan of Fig. 4, however, the optimizer can prepare effectively for both cases, and the application program using this dy- namic plan will perform well for any predicate value.

图 4 显示了一个非常简单的动态计划。 想象一下由程序变量控制的选择谓词。 索引扫描和函数连接可以比文件扫描快得多,但当索引是非聚集的并且必须检索大量项目时则不然。 然而,使用图 4 的计划,优化器可以有效地为这两种情况做好准备,并且使用此动态计划的应用程序对于任何谓词值都将表现良好。

The choose-plan operator allows considerable flexibil- ity. If only one choose-plan operator is used as the top of a query evaluation plan, it implements a multiplan access module. If multiple choose-plan operators are included in a plan, they implement a dynamic query evaluation plan. Thus, all forms of dynamic plans identified in [17] can be realized with one simple and effective mechanism. Note that the choose-plan operator does not make the policy decision concerning which of several plans to execute; it only provides the mechanism. The policy is imported us- ing a support function. Thus, the decision can be made depending on bindings for query variables (e.g., program variables used as constants in a query predicate), on the resource and contention situation (e.g., the availability of processors and memory), other considerations such as user priority, or all of the above.

选择计划运算符具有相当大的灵活性。 如果仅使用一个选择计划运算符作为查询评估计划的顶部,则它会实现多计划访问模块。 如果一个计划中包含多个选择计划运算符,它们将实现动态查询评估计划。 因此,[17]中确定的所有形式的动态计划都可以通过一种简单而有效的机制来实现。 请注意,选择计划操作员并不做出有关执行多个计划中哪一个的策略决定; 它仅提供机制。 该策略是使用支持功能导入的。 因此,可以根据查询变量的绑定(例如,在查询谓词中用作常量的程序变量)、资源和争用情况(例如,处理器和内存的可用性)、其他考虑因素(例如用户优先级)来做出决定 ,或以上全部。

The choose-plan operator provides significant new freedom in query optimization and evaluation with an ex- tremely small amount of code. Since it is compatible with the query processing paradigm, its presence does not af- fect the other operators at all, and it can be used in a very flexible way. The operator is another example for Vol- cano’s design principle to provide mechanisms to imple- ment a multitude of policies. We used the same philoso- phy when designing and implementing a scheme for parallel query evaluation.

选择计划运算符以极少量的代码为查询优化和评估提供了显着的新自由度。 由于它与查询处理范例兼容,因此它的存在根本不会影响其他运算符,并且可以以非常灵活的方式使用。 运营商是 Volcano 设计原则的另一个例子,它提供了实施多种策略的机制。 在设计和实现并行查询评估方案时,我们使用了相同的理念。

VI. MULTIPROCESSOR QOURERY EVALUATION

A large number of research and development projects have shown over the last decade that query processing in relational database systems can benefit significantly from parallel algorithms. The main reasons parallelism is rel- atively easy to exploit in relational query processing sys- tems are 1) query processing is performed using a tree of operators that can be executed in separate processes and processors connected with pipelines (inter-operator par- allelism) and 2) each operator consumes and produces sets that can be partitioned or fragmented into disjoint subsets to be processed in parallel (intra-operator parallelism). Fortunately, the reasons parallelism is easy to exploit in relational systems does not require the relational data model per se, only that queries be processed as sets of data items in a tree of operators. These are exactly the assumptions made in the design of Volcano, and it was therefore logical to parallelize extensible query process- ing in Volcano.

过去十年中的大量研究和开发项目表明,关系数据库系统中的查询处理可以从并行算法中受益匪浅。 在关系查询处理系统中并行性相对容易利用的主要原因是:1)查询处理是使用运算符树执行的,该运算符树可以在与管道连接的单独进程和处理器中执行(运算符间并行性) 2) 每个运算符消耗并生成可以被分区或分段为不相交子集以进行并行处理的集合(运算符内并行性)。 幸运的是,并行性在关系系统中易于利用的原因并不需要关系数据模型本身,只需要将查询作为运算符树中的数据项集进行处理即可。 这些正是 Volcano 设计中所做的假设,因此在 Volcano 中并行化可扩展查询处理是合乎逻辑的。

When Volcano was ported to a multiprocessor machine, it was desirable to use all single-process query processing code existing at that point without any change.The result is very clean, self-scheduling parallel processing. We call this novel approach the operator model of parallelizing a query evaluation engine [20].*In this model, all parallelism issues are localized in one operator that uses and provides the standard iterator interface to the operators above and below in a query tree.

当 Volcano 被移植到多处理器机器上时,希望使用当时存在的所有单进程查询处理代码而不进行任何更改。结果是非常干净的、自调度的并行处理。 我们将这种新颖的方法称为并行化查询评估引擎的运算符模型[20]。*在此模型中,所有并行问题都集中在一个运算符中,该运算符使用标准迭代器接口并向查询树中的上方和下方运算符提供标准迭代器接口。

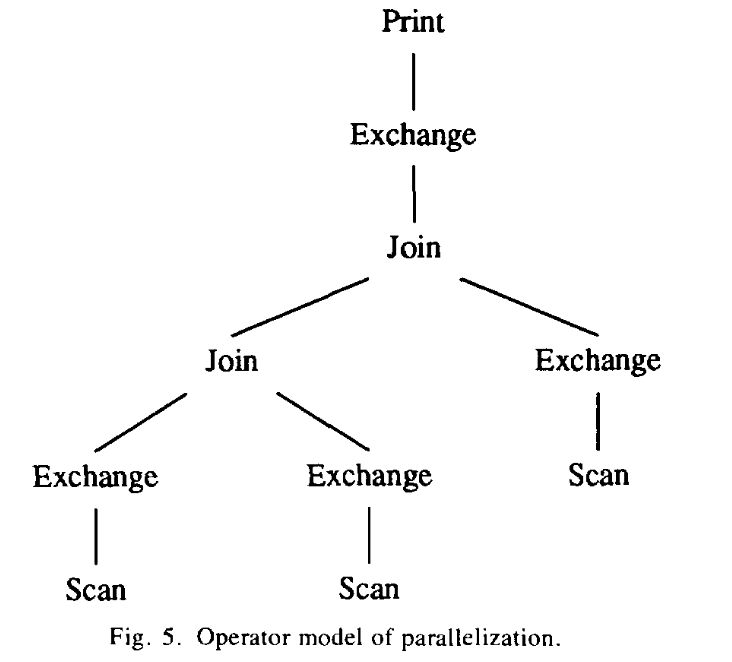

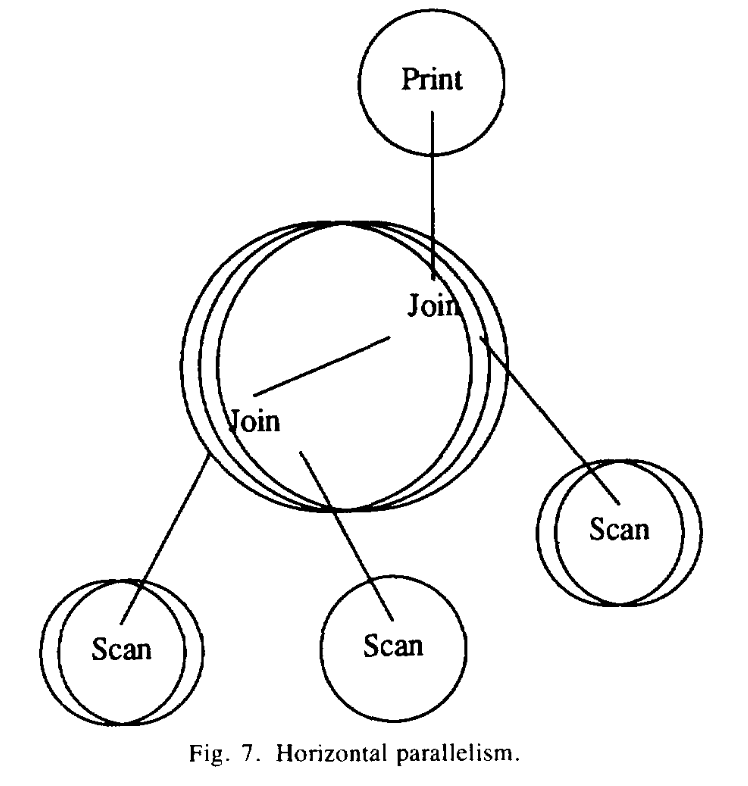

The module responsible for parallel execution and syn- chronization is called the exchange iterator in Volcano. Notice that it is an iterator with open, next, and close pro- cedures; therefore, it can be inserted at any one place or at multiple places in a complex query tree. Fig. 5 shows a complex query execution plan that includes data pro- cessing operators, i.e., file scans and joins, and exchange operators. The next two figures will show the processes created when this plan is executed.

负责并行执行和同步的模块在 Volcano 中称为交换迭代器。 请注意,它是一个具有 open、next 和 close 过程的迭代器; 因此,它可以插入到复杂查询树中的任意一处或多处。 图 5 显示了一个复杂的查询执行计划,其中包括数据处理运算符,即文件扫描和连接以及交换运算符。 接下来的两幅图将显示执行该计划时创建的流程。

This section describes how the exchange iterator im- plements vertical and horizontal parallelism followed by discussions of alternative modes of operation of Vol- cano’s exchange operator and modifications to the file system required for multiprocess query evaluation. The description goes into a fair amount of detail since the ex- change operator adds significantly to the power of Vol- cano. In fact, it represents a new concept in parallel query execution that is likely to prove useful in parallelizing both existing commercial database products and extensible sin- gle-process systems. It is described here for shared-mem- ory systems only; considerations for the distributed-mem- ory version are outlined as future work in the last section of this paper.

本节介绍交换迭代器如何实现垂直和水平并行性,然后讨论 Volcano 交换运算符的替代操作模式以及对多进程查询评估所需的文件系统的修改。 由于交易所运营商显着增强了 Volcano 的功能,因此描述非常详细。 事实上,它代表了并行查询执行的一个新概念,可能在现有商业数据库产品和可扩展单进程系统的并行化方面很有用。 此处仅针对共享内存系统进行描述; 本文最后一部分概述了分布式内存版本的考虑因素作为未来的工作。

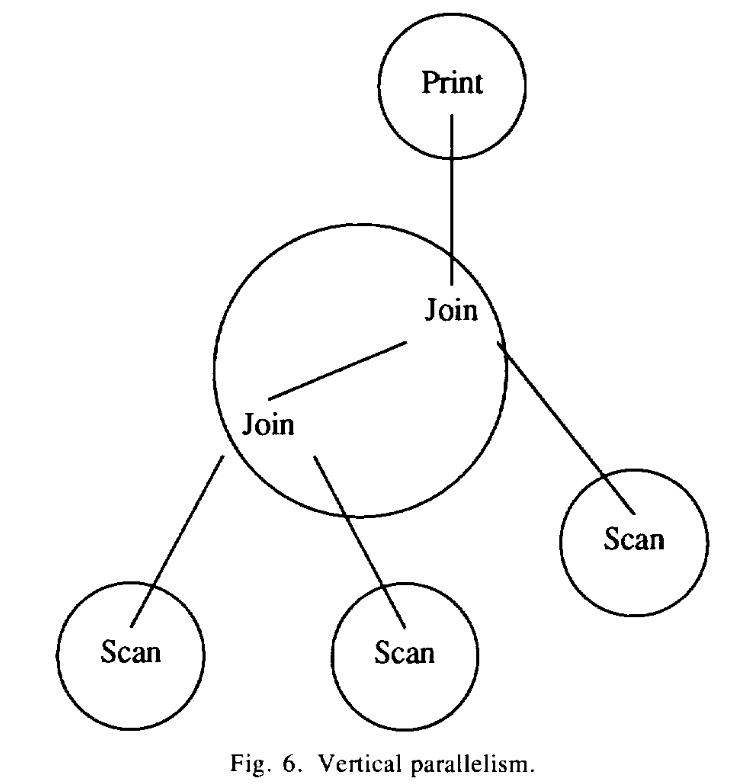

A. Vertical Parallelism 垂直并行性

The first function of exchange is to provide vertical parallelism or pipelining between processes. The open procedure creates a new process after creating a data structure in shared memory called a port for synchroni- zation and data exchange. The child process is an exact duplicate of the parent process. The exchange operator then takes different paths in the parent and child pro- cesses.

交换的第一个功能是在进程之间提供垂直并行性或流水线。 open 过程在共享内存中创建称为端口 的数据结构后创建一个新进程,用于同步和数据交换。 子进程是父进程的精确副本。 然后交换操作符在父进程和子进程中采取不同的路径。